Since early 2025, the emergence of AI has reshaped daily life. Yet while generative AI tools can serve as powerful personal consultants, they often produce misleading or entirely false information that appears highly convincing.

This phenomenon, called AI hallucination, occurs when large language models (LLMs) perceive patterns or objects that do not actually exist, producing outputs that are nonsensical or altogether inaccurate.

AI is also used in scams, including deepfakes, where a person’s likeness is convincingly inserted into images or videos.

To address these risks, China issued a new regulation on mandatory labeling of AI-generated content (AGC) on March 14, which will take effect on September 1.

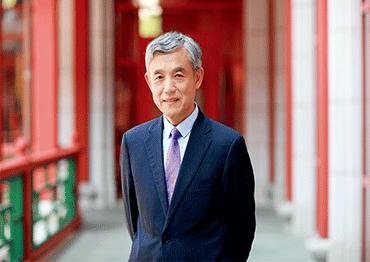

Given the rising complexity of AI-human interaction, it is vital to continually update governance frameworks based on real-world scenarios, argues Professor Xue Lan of Tsinghua University, who also serves as director of the National New Generation AI Governance Professional Committee.

In an interview with NewsChina, he stressed the importance of striking the right balance between advancing AI technology and managing its risks, while protecting privacy, labor rights and innovation.

NewsChina: AI hallucinations can have serious consequences, especially in high-stakes fields like medicine and law. As AI becomes more widely applied, how should we address the risks associated with hallucinations?

Xue Lan: Although AI hallucinations can’t be completely eliminated as of now, there is significant room for technical improvement. We can reduce the likelihood of AI hallucinations through measures such as improving data quality, optimizing model architectures and strengthening system-level controls.

The government should formulate a set of AI safety standards and regulatory systems. Before a large-scale AI system is introduced into real-world scenarios, it is necessary to first test whether the system meets safety requirements. This is particularly necessary in sensitive fields that affect human lives, such as healthcare. Of course, testing standards must closely align with specific application scenarios.

It is crucial to strike a rational balance between the benefits and risks of technology when formulating laws and regulations. For example, in the medical field, if AI’s diagnostic accuracy exceeds that of doctors, should decisions be based solely on AI systems rather than allowing doctors to be involved in the decision-making process? If a serious medical error is caused by AI hallucinations, who should be held accountable, the hospital or the AI developer?

Rather than simply choosing between humans and machines, we should consider human-machine collaboration. Even if the accuracy of AI surpasses that of humans, doctors should still be involved in the final diagnosis. In cases where accountability is unclear, a feasible solution is to provide professional insurance for both AI developers and users [such as doctors].

We can refer to how adverse vaccine reactions are handled. Vaccines play a crucial role in disease prevention and control. However, after vaccination, a very small number of people may experience adverse reactions, which in some extreme cases may result in death. Some countries have established vaccine injury compensation funds to support those affected. For AI applications, technical risk funds or social insurance systems can be set up to prevent developers and users from shouldering the risks alone.

NC: Nearly 90 percent of enterprises deploying DeepSeek do not adopt any security measures, according to Qi An Xin, a Beijing-based internet security and service provider. What are the risks and how should they be addressed?

XL: First, if enterprises do not take measures to address data security issues after deploying large language models, there is a risk of internal data leaks. In particular, some enterprises require large computing resources and have to rely on public cloud platforms. Without security measures, data can be leaked, tampered with or even exploited by competitors.

Second, there are application risks. As mentioned earlier, if there are problems with the data used by an AI system, they may exacerbate hallucinations and even endanger the lives of patients. Therefore, enterprises using open-source large models and targeting mass consumers must take the initiative to formulate higher internal technical review standards and strictly monitor the application of the technology.

NC: What are the core challenges facing AI governance?

XL: An important bottleneck for AI governance in China is the lack of high-quality data. AI needs vast amounts of training data to enhance its capabilities. The more and the better the data, the stronger the system’s capabilities become, and AI hallucinations will be less likely.

In China, there are relatively few standardized data service providers. Although the volume of data is large, its quality is rather low. Therefore, constructing a complete and mature data market is an issue that we urgently need to address. The rapid development of the data industry can support large-scale implementation of AI applications.

Another significant challenge is data privacy. In fields like autonomous driving, it is relatively easy to collect data. But in areas like healthcare and education, it is much more difficult.

When it comes to specific application scenarios and personal data privacy issues, all sorts of resistance may be encountered in the process of data acquisition. Striking a balance between data collection and privacy protection is a key challenge for AI governance.

The government holds a large amount of public data covering all aspects of social life, which is of great value for AI training. Currently, the degree of openness of public data is insufficient. As AI becomes more widely adopted, governments at all levels need to consider further expanding data openness, provided that security is ensured.

NC: How can we adopt more targeted governance measures tailored to different AI application scenarios?

XL: The interaction between AI and humans is extremely complex. In particular, there is a significant difference between the application of AI in daily life scenarios and its application in industrial scenarios. In industrial scenarios, most of the additional costs caused by AI errors can be anticipated and analyzed in advance, making it easier to formulate corresponding solutions. However, in contrast to mechanized production systems, human emotions and responses vary, and the ways people interact with AI systems are diverse. When AI is applied to services involving people, it encounters a great deal of uncertainty.

Take education for example. Using AI in student management is a trend. Some schools have introduced AI classroom behavior management systems, which observe subtle changes in students’ facial expressions through cameras, then judge whether the students are actively engaged. Parents have expressed different opinions on this issue. Some think this infringes on their children’s privacy, while others believe it can help students improve academically. Some education authorities argue that as classrooms are public places, privacy infringement isn’t an issue. This is a typical governance problem raised by AI in education. It is hard for people to agree on such controversial issues.

Consider another example from the medical field. What if a patient is in a bad mood while describing their symptoms and behaves unexpectedly? Could this lead to a misdiagnosis by AI? Can AI take into account non-medical factors when making diagnoses? These issues all require careful consideration and should be addressed in governance plans.

In the future, we are likely to encounter a variety of complex, unpredictable problems. This is why I have always emphasized a rapid response to governance in the AI era. As applications are rolled out, we should introduce policies and pilot them at the same time. Through this, we can explore and discover new problems before making modifications to improve oversight systems as we go. We should not expect to settle everything all at once. What is more crucial is how to strike an effective balance between technological development, risk management and governance models.

NC: As AI enters its “golden age” and drives higher productivity, there are concerns it will displace workers. How should we manage the unemployment risks posed by large-scale AI adoption?

XL: AI significantly cuts labor costs, particularly in intellectual work, posing a major threat to white-collar employment. If these changes happen too rapidly, they could easily trigger social unrest. Therefore, the government urgently needs to address two key issues: facilitating the stable rollout of AI applications and establishing employment safeguards for vulnerable groups during the transformation.

The controversy surrounding Baidu’s Apollo Go Robotaxi in Wuhan (which drew complaints from taxi drivers) highlighted the need for local governments to implement well-crafted policies. These policies should be designed to guide the development of autonomous driving technology and mitigate any potential negative impact on employment within the industry. For example, taxi drivers often decline trips to remote areas. Introducing autonomous driving technology in such specific scenarios can reduce its short-term impact on employment.

Our next step is to innovate in how we govern society to adapt to the social impacts brought by AI. Balancing the risks and benefits of AI requires rational public discussion. The ultimate goal is to reach a broad consensus, not just among experts and government, but across all levels of society. This inclusive consensus will ensure that AI development aligns with the collective interests and values of the entire population.

NC: With AI being implemented on a large scale, what roles do governments and enterprises play in AI governance? How can their cooperation be improved?

XL: When we talk about governance, this term is often interpreted as the government regulating enterprises. But in the field of AI, both the government and enterprises find it difficult to foresee the potential risks that may arise during technological development and application. Their relationship should no longer be the traditional cat-and-mouse dynamic. Instead, there is a pressing need to enhance two-way communication and cooperation, so both can identify and address these risks.

The government should maintain an open attitude and proactively engage and negotiate with enterprises. Companies can also play an important role in AI governance by taking more initiative in self-regulation and flagging potential risks to authorities. Some major tech firms are relatively proactive in AI governance. Alibaba and Tencent have established internal AI ethics committees and conduct ethical reviews of their own products.

However, with the widespread adoption of DeepSeek, more small- and medium-sized enterprises are entering the AI space. Whether these smaller players have the capacity to engage in governance and establish mechanisms on par with leading enterprises remains to be seen. In the future, there will be a pressing need to foster consensus on governance at the industry level.

NC: Does this mean we could see a few leading companies monopolize global AI technologies?

XL: Monopolies have always existed in the tech world. For example, the manufacturing of large civilian aircraft is still dominated by a few companies. A similar situation may arise with AI in the future.

However, the emergence of DeepSeek shows that, at least for now, the AI industry still holds great potential for innovation. It is not easy for any single company or country to maintain a dominant position. DeepSeek has also lowered the technological threshold, enabling more enterprises to engage in LLM applications. We can hardly predict the future market landscape.

Of course, technological hegemony and monopoly are issues that require our attention. Currently, there are two main types of regulation when it comes to technology. One is social regulation, which focuses on managing potential risks. The other is market regulation, which covers issues such as monopolies. As AI has not yet reached large-scale application, AI governance is primarily concentrated on mitigating potential risks to society. But it will be crucial to apply anti-monopoly laws and regulations rationally. If a few enterprises monopolize technology and harm markets or public interests, regulations must be promptly enforced.
